AI-Assisted Screening Tool for Systematic Literature Reviews

Using a scalable, cloud-native architecture and modern frontend/backend stack.

>80% reduction in manual work

3–6 months saved per large review

$40,000–$80,000 saved per review

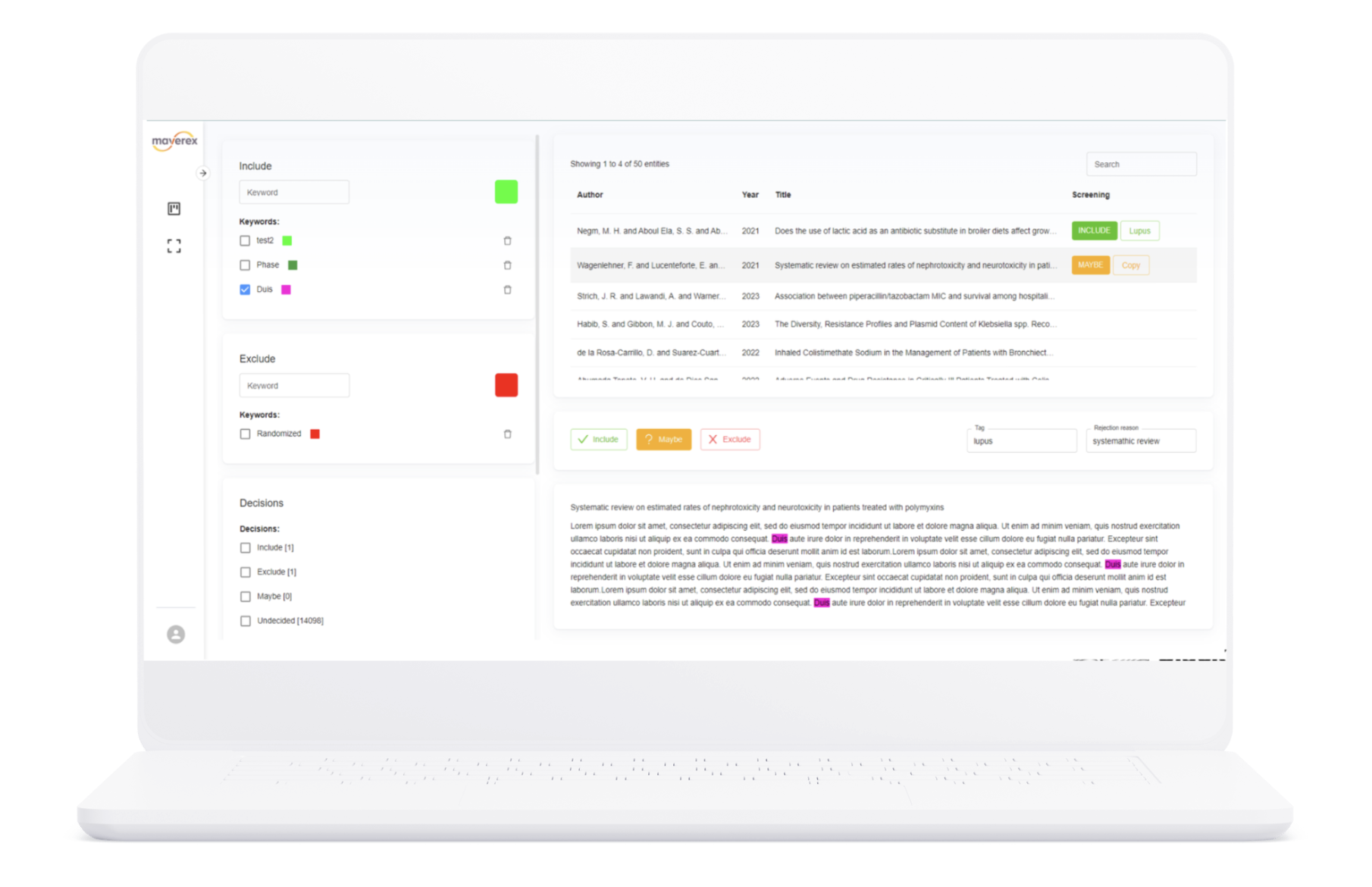

Rebuilding and enhancing an R Shiny-based tool with scalable, collaborative, AI-assisted screening for literature reviews.

Industry: Pharma & Biotech

Location: London

Services: AI Ops & MLOps Pipelines, Cloud Architecture, AI/ML Solutions, UI/UX Design

Systematic reviews are essential for health technology assessments, real-world evidence (RWE) generation, and regulatory submissions — but traditional workflows are slow, inconsistent, and impossible to scale.

Solution

We rebuilt the Screener tool from scratch using a scalable, cloud-native architecture and modern frontend/backend stack.

To deliver an AI-assisted screening tool, Blackthorn AI applied:

Project duration

01–02 Weeks

We analyzed the legacy R Shiny tool, identified major usability gaps, and documented over 20 functional requirements covering inputs, screening logic, tagging workflows, and export structure.

03–04 Weeks

We designed a modular, scalable architecture using Node.js, MongoDB, and React, and defined the collaboration model with role-based permissions and real-time interaction support.
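To make the role-based permission idea concrete, here is a minimal TypeScript sketch. The role names, action names, and the `can` helper are hypothetical; the case study does not list the actual roles or how the production system enforces them.

```typescript
// Hypothetical roles and actions, chosen only for illustration.
type Role = "owner" | "reviewer" | "observer";
type Action = "view" | "screen" | "resolveConflict" | "manageMembers" | "export";

// Static permission table: which actions each role may perform.
const PERMISSIONS: Record<Role, Action[]> = {
  owner: ["view", "screen", "resolveConflict", "manageMembers", "export"],
  reviewer: ["view", "screen"],
  observer: ["view"],
};

interface Member {
  userId: string;
  role: Role;
}

// Returns true when the member's role grants the requested action.
function can(member: Member, action: Action): boolean {
  return PERMISSIONS[member.role].indexOf(action) !== -1;
}
```

In a Node.js backend, a check like `can(member, "export")` would typically run in request middleware before the handler executes, so the React client never has to be trusted with access decisions.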

05–10 Weeks

We rebuilt all major screening functionalities including tagging, inclusion/exclusion flows, conflict resolution, and batch abstract uploads, ensuring smooth handling of datasets up to 15,000 records.
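The inclusion/exclusion and conflict-resolution flow can be illustrated with a small sketch. It assumes each record is screened by a fixed number of reviewers and that any disagreement is flagged for resolution. The `Decision` shape, the status names, and `summarizeDecisions` are illustrative assumptions, not the tool's actual data model.

```typescript
type Verdict = "include" | "exclude";
type Status = "included" | "excluded" | "conflict" | "pending";

interface Decision {
  recordId: string;
  reviewerId: string;
  verdict: Verdict;
}

interface RecordStatus {
  recordId: string;
  status: Status;
}

// Groups decisions by record and derives one status per record.
// Assumes at most one decision per reviewer per record.
function summarizeDecisions(decisions: Decision[], requiredReviewers: number): RecordStatus[] {
  // Object.create(null) gives a dictionary with no inherited keys.
  const byRecord: Record<string, Decision[]> = Object.create(null);
  for (const d of decisions) {
    const votes = byRecord[d.recordId] || [];
    votes.push(d);
    byRecord[d.recordId] = votes;
  }
  return Object.keys(byRecord).map((recordId) => {
    const votes = byRecord[recordId];
    const includes = votes.filter((v) => v.verdict === "include").length;
    const excludes = votes.length - includes;
    let status: Status;
    if (includes > 0 && excludes > 0) {
      status = "conflict"; // reviewers disagree, needs resolution
    } else if (votes.length < requiredReviewers) {
      status = "pending"; // not enough reviewers have voted yet
    } else {
      status = includes > 0 ? "included" : "excluded";
    }
    return { recordId, status };
  });
}
```

A single pass like this stays linear in the number of decisions, which is comfortable at the 15,000-record scale mentioned above.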

11–13 Weeks

We implemented grouped keyword logic (AND/OR), blind/unblind workflows for reviewer consensus, and structured the tagging layer for future integration of ML-driven decision suggestions.
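One plausible reading of grouped AND/OR keyword logic is sketched below: terms are combined inside a group with one operator, and groups are combined with another. The `KeywordGroup` and `KeywordQuery` types and the case-insensitive substring matching are assumptions for illustration; the case study does not describe the actual matching rules.

```typescript
type Operator = "AND" | "OR";

interface KeywordGroup {
  operator: Operator; // how terms inside the group are combined
  terms: string[];
}

interface KeywordQuery {
  operator: Operator; // how the groups themselves are combined
  groups: KeywordGroup[];
}

// Case-insensitive substring match. A real screener would likely
// also handle word boundaries and stemming.
function containsTerm(text: string, term: string): boolean {
  return text.toLowerCase().indexOf(term.toLowerCase()) !== -1;
}

function matchesGroup(text: string, group: KeywordGroup): boolean {
  const hit = (term: string): boolean => containsTerm(text, term);
  return group.operator === "AND" ? group.terms.every(hit) : group.terms.some(hit);
}

function matchesQuery(text: string, query: KeywordQuery): boolean {
  const hit = (group: KeywordGroup): boolean => matchesGroup(text, group);
  return query.operator === "AND" ? query.groups.every(hit) : query.groups.some(hit);
}

// Example: (randomized OR randomised) AND (diabetes OR insulin)
const exampleQuery: KeywordQuery = {
  operator: "AND",
  groups: [
    { operator: "OR", terms: ["randomized", "randomised"] },
    { operator: "OR", terms: ["diabetes", "insulin"] },
  ],
};
```

With this query, `matchesQuery("A randomised trial of insulin therapy", exampleQuery)` returns `true`, while an abstract about statins fails the second group and returns `false`. Because matching is by substring, "insulin" would also match "insulinoma", which is one reason a production matcher would need word-boundary handling.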

14–15 Weeks

We completed end-to-end testing on real datasets, onboarded the client team, and delivered a production-grade MVP exceeding the original feature set and usability of the legacy system.

Delivering Impact

>80%

Reduction in manual work

The AI-assisted screening tool automated the bulk of repetitive literature review tasks such as initial screening, deduplication, and data tagging.
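As an illustration of the deduplication step, the sketch below keeps the first occurrence of each citation, keyed by DOI when one is present and by a normalized title otherwise. The `Citation` shape and the matching rule are assumptions; the case study does not say how the tool identifies duplicates.

```typescript
interface Citation {
  id: string;
  title: string;
  doi?: string;
}

// Builds a comparison key: the lowercased DOI if available, otherwise the
// title reduced to lowercase alphanumeric words separated by single spaces.
function dedupKey(c: Citation): string {
  if (c.doi && c.doi.trim() !== "") {
    return "doi:" + c.doi.trim().toLowerCase();
  }
  return "title:" + c.title.toLowerCase().replace(/[^a-z0-9]+/g, " ").trim();
}

// Keeps the first citation seen for each key and drops later ones.
function deduplicate(citations: Citation[]): Citation[] {
  const seen: Record<string, boolean> = {};
  const unique: Citation[] = [];
  for (const c of citations) {
    const key = dedupKey(c);
    if (!seen[key]) {
      seen[key] = true;
      unique.push(c);
    }
  }
  return unique;
}
```

This simple rule will miss a duplicate pair where only one copy carries a DOI, so a production pipeline would likely add fuzzy title matching or a check on authors and publication year.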

3–6 months

Saved per large review

Auto-tagging, filtering, and highlight logic reduced the need for manual decision pre-work, especially for low-relevance exclusions.

$40,000–$80,000

Saved per review

By minimizing the need for large research teams and external outsourcing, each large-scale review achieved an average cost saving of $40K–$80K.