AI Protein Design | De Novo Protein Design with AI – Blackthorn AI

AI protein design for creating and optimizing de novo proteins using advanced machine learning models for biotech and life science applications

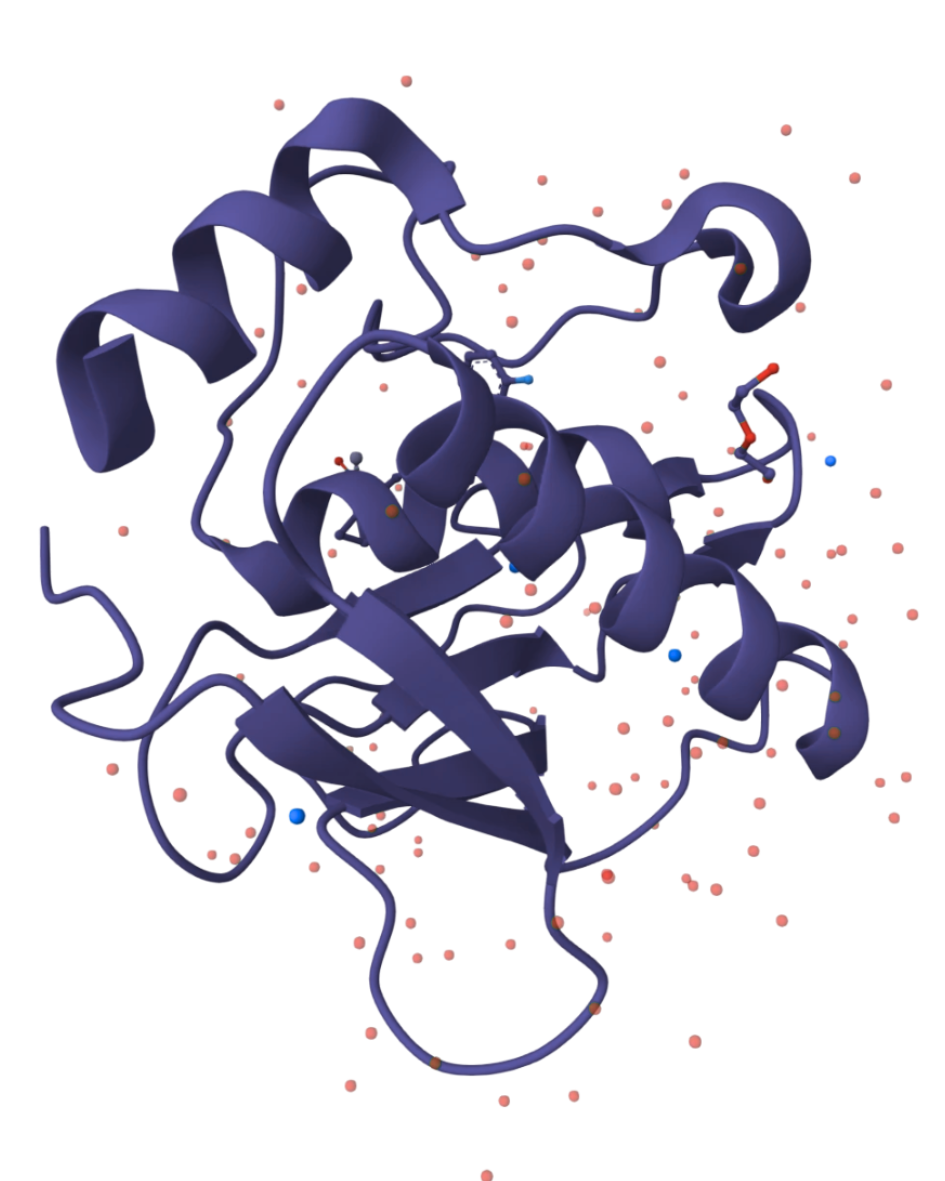

AI-Powered Protein Design Pipelines

Generative AI for Novel Protein Designs

Generative architectures (diffusion, transformer-based) for de novo sequence creation, scaffold repurposing, and expansion into unexplored molecular space.

ML Models for Structure & Function Prediction

High-accuracy structure, stability, and developability predictions (AlphaFold-class, Boltz-family, OpenFold3-grade models) tailored to your therapeutic modality: antibodies, enzymes, cytokines, fusion proteins, scaffolds.

Protein–Protein Interaction (PPI) Modeling

Deep-learning models for binding affinity, interaction hotspots, conformational flexibility, and docking prioritization – ideal for antibody design, biologics, and synthetic binding proteins.

Sequence Optimization & In-Silico Mutagenesis

AI models generate and rank variants by potency, stability, immunogenicity, and manufacturability – reducing experimental rounds and reagent costs.
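As a rough illustration of the generate-and-rank idea, here is a minimal sketch that enumerates every single-point substitution of a parent sequence and ranks the variants by a weighted sum of per-property scores. The scoring functions (`toy_charged_fraction`, `toy_hydrophobic_penalty`), the weights, and the parent sequence are all hypothetical placeholders for illustration; they are not real predictors and not Blackthorn AI's models, which would take the place of these stand-ins in practice.

```python
from typing import Callable, Dict, Iterator, List, Tuple

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues


def single_point_variants(seq: str) -> Iterator[Tuple[str, str]]:
    """Yield (mutation label, variant sequence) for every single substitution."""
    for i, wild_type in enumerate(seq):
        for aa in AMINO_ACIDS:
            if aa != wild_type:
                # Label format: wild-type residue, 1-based position, new residue (e.g. "A1D").
                yield f"{wild_type}{i + 1}{aa}", seq[:i] + aa + seq[i + 1:]


def toy_charged_fraction(seq: str) -> float:
    """Hypothetical stand-in for a solubility model: fraction of charged residues."""
    return sum(r in "DEKR" for r in seq) / len(seq)


def toy_hydrophobic_penalty(seq: str) -> float:
    """Hypothetical stand-in for an aggregation model: negated hydrophobic fraction."""
    return -sum(r in "AILMFVW" for r in seq) / len(seq)


def rank_variants(
    seq: str,
    scorers: Dict[str, Callable[[str], float]],
    weights: Dict[str, float],
    top_k: int = 5,
) -> List[Tuple[float, str, str]]:
    """Return the top_k variants as (composite score, label, sequence), best first."""
    scored = []
    for label, variant in single_point_variants(seq):
        total = sum(weights[name] * fn(variant) for name, fn in scorers.items())
        scored.append((total, label, variant))
    # Sort by descending score; break ties alphabetically by label for determinism.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return scored[:top_k]


# Example with a made-up 10-residue parent sequence and arbitrary weights.
parent = "MKTAYIAKQR"
scorers = {"solubility": toy_charged_fraction, "aggregation": toy_hydrophobic_penalty}
weights = {"solubility": 0.6, "aggregation": 0.4}
for score, label, variant in rank_variants(parent, scorers, weights, top_k=3):
    print(f"{label}\t{score:.3f}\t{variant}")
```

A weighted sum is only one way to combine properties. It is simple to explain, but the weights encode trade-offs that would need to be set per program.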

Developability & Liabilities Prediction

End-to-end assessment of aggregation, toxicity, immunogenicity, solubility, PTMs, and expression challenges – minimizing downstream failure.

Integrated Wet-Lab Support Pipelines

End-to-end systems that feed results back into your ELN/LIMS, support iterative design–test cycles, and cut experimental overhead.

Key R&D Challenges in Protein Design We Solve with AI

Higher hit probability per experimental round

Reducing experimental burden by prioritizing high-likelihood functional variants before synthesis

Avoiding constructs that misfold or fail purification

Identifying stable, soluble, and expressible protein designs prior to wet-lab validation

Guiding rational design of modulators and biologics

Mapping and prioritizing therapeutically relevant PPI interfaces for inhibition or stabilization

Lowering manufacturing and formulation risks

Detecting early signs of aggregation, degradation pathways, or structural instability

De-risking IND-enabling work

Predicting immunogenic and liability-prone sequence regions before preclinical studies

Reducing downstream liabilities early

Ranking antibody or binder variants using joint affinity, specificity, and developability scores

Faster progression to IND candidates

Accelerating lead optimization by modeling potency, developability, and manufacturability simultaneously
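One common way to handle several objectives at once without collapsing them into a single number is Pareto filtering: keep only the candidates that no other candidate beats on every objective. The sketch below assumes each variant already has three scores (potency, developability, manufacturability) where higher is better. The variant names and score values are invented for illustration, and this is a generic technique, not a description of any specific production pipeline.

```python
from typing import Dict, List, Tuple

Scores = Tuple[float, float, float]  # (potency, developability, manufacturability)


def dominates(a: Scores, b: Scores) -> bool:
    """True if a is at least as good as b on every objective and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates: Dict[str, Scores]) -> List[str]:
    """Return the names of candidates that are not dominated by any other candidate."""
    front = []
    for name, scores in candidates.items():
        dominated = any(
            dominates(other, scores)
            for other_name, other in candidates.items()
            if other_name != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Hypothetical scores for four variants; higher is better on every axis.
candidates = {
    "v1": (0.9, 0.4, 0.7),
    "v2": (0.6, 0.8, 0.6),
    "v3": (0.5, 0.3, 0.5),  # worse than v1 on all three objectives
    "v4": (0.9, 0.4, 0.6),  # ties v1 on two objectives, worse on the third
}
print(pareto_front(candidates))
```

The variants left on the front represent different trade-offs, so choosing among them still requires program-specific judgment or experimental data.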

Have a specific AI protein design or PPI modeling challenge in mind?

Share your target, interaction context, and available data. We’ll evaluate whether AI-driven protein design, PPI modeling, or hybrid physics-ML methods are applicable — and recommend an approach grounded in what can be modeled reliably.

Industry: Biotech

Location: USA

Services: AI Ops & MLOps, Cloud Architecture, UI/UX Design

Budget: $200,000 to $999,999

The team surpassed expectations on timelines, provided much needed guidance and overall input on design, all while operating with a high degree of autonomy.

Carl Kaub

Vice President of Chemistry at HTG Molecular Diagnostic

Practical Impact of AI-Driven Protein Design

10× Larger Variant Screening Space

AI expands search into billions of virtual sequences, uncovering high-potency candidates unreachable through traditional directed evolution or rational design alone.

70% Fewer Wet-Lab Iterations

Predictive models surface top variants early, allowing teams to order fewer constructs while achieving higher success rates in the first experimental round.

2–3× Higher Hit Quality

PPI and stability models improve potency, specificity, and developability – drastically reducing downstream attrition.

>95% Prediction Accuracy

Sequence-to-structure and PPI predictions optimized for biologics lead to more reliable ranking and fewer dead-end constructs.

5× Faster Lead Optimization

From target → candidate → optimized variant in weeks, not quarters, accelerating the path to IND-enabling studies.

Let’s build your AI advantage

Whether you’re prototyping a molecule scoring system or looking to automate your clinical ops – we’ll help you turn your biotech data into a competitive edge.
